A.I.

Effective AI inferencing for embedded systems

Key features

Programmable Performance

- 256-bit vector processing unit with 100GOPS int-8 AI inferencing performance.

- No heterogeneous communication overhead.

- Supports binarized networks, achieving over 800GOPS.

- 1MB on-chip SRAM supporting AI models with up to ~800kB tensor arena requirement.

- Weights are stored in external flash memory, while the large on-chip memory maximizes AI model inference performance.
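To make the binarized-network figure more concrete, here is a minimal sketch of a binarized dot product computed with XNOR and a bit count. It is plain Python for illustration only, not XMOS code; the helper names (`pack_signs`, `binary_dot`, `lanes_per_vector`) are hypothetical. The lane arithmetic at the end assumes one element per lane of the 256-bit vector unit, which is consistent with the roughly 8x gap between the quoted int8 and binarized figures but is not a derivation of them.

```python
# Illustrative only: a binarized dot product using XNOR + popcount.
# Values in {-1, +1} are packed one per bit (1 -> +1, 0 -> -1).

VECTOR_BITS = 256  # vector width quoted in the feature list


def pack_signs(values):
    """Pack a sequence of +1/-1 values into an int, first value in bit 0."""
    word = 0
    for i, v in enumerate(values):
        if v not in (1, -1):
            raise ValueError("binarized values must be +1 or -1")
        if v == 1:
            word |= 1 << i
    return word


def binary_dot(a_bits, b_bits, n):
    """Dot product of two packed +1/-1 vectors of length n."""
    mask = (1 << n) - 1
    # XNOR marks the positions where the two signs agree.
    agree = ~(a_bits ^ b_bits) & mask
    matches = bin(agree).count("1")
    # Each agreement contributes +1 and each disagreement -1.
    return 2 * matches - n


def lanes_per_vector(element_bits):
    """Number of elements of the given width that fit in one vector."""
    return VECTOR_BITS // element_bits


a = [1, -1, 1, 1, -1, -1, 1, -1]
b = [1, 1, -1, 1, -1, 1, 1, -1]
reference = sum(x * y for x, y in zip(a, b))
result = binary_dot(pack_signs(a), pack_signs(b), len(a))

int8_lanes = lanes_per_vector(8)    # 8-bit elements per vector
binary_lanes = lanes_per_vector(1)  # 1-bit elements per vector
```

Under these assumptions a single vector holds eight times as many binarized values as int8 values, and the multiply-accumulate collapses into bitwise operations.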

Easy to use

- XMOS AI Tools allow deployment of AI models developed in Python-based environments such as PyTorch and TensorFlow.

- Models are optimized for embedded use with TensorFlow Lite, using high-performance operators that exploit the xcore.ai capabilities and minimize tensor arena sizes.

- Generated C code can be integrated with other embedded functions allowing xcore.ai to efficiently handle image-based and audio-based applications.

- While most embedded AI apps use 8-bit quantization, xcore.ai also supports binarized networks and larger datatypes (e.g., floating point) where needed.
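As background on the 8-bit quantization mentioned above, the following sketch shows the affine mapping commonly used for int8 models, where a real value is approximated as `scale * (q - zero_point)`. It is a generic illustration in plain Python, not part of the XMOS AI Tools; the function names and the example range of -1.0 to 3.0 are assumptions chosen for the demonstration.

```python
def choose_params(x_min, x_max):
    """Pick a scale and zero point mapping [x_min, x_max] onto [-128, 127]."""
    # Widen the range to include 0 so that zero is exactly representable.
    x_min = min(x_min, 0.0)
    x_max = max(x_max, 0.0)
    scale = (x_max - x_min) / 255.0
    if scale == 0.0:
        scale = 1.0  # degenerate all-zero range
    zero_point = round(-128 - x_min / scale)
    return scale, zero_point


def quantize(x, scale, zero_point):
    """Map a real value to int8, saturating at the ends of the range."""
    q = round(x / scale) + zero_point
    return max(-128, min(127, q))


def dequantize(q, scale, zero_point):
    """Recover the approximate real value from an int8 code."""
    return scale * (q - zero_point)


# Hypothetical activation range for the demonstration.
scale, zero_point = choose_params(-1.0, 3.0)

q_half = quantize(0.5, scale, zero_point)
round_trip_error = abs(dequantize(q_half, scale, zero_point) - 0.5)
```

For values inside the chosen range, the round-trip error stays within half a quantization step (`scale / 2`); values outside it saturate at -128 or 127.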

Flexible & Scalable

- Unique design with 16 independent hardware threads per device.

- Threads can be used independently or collaboratively.

- Supports a diverse range of functions, including AI inferencing, signal conditioning, I/O and control.

- High-speed communication between threads and between devices provides:

  - Scalability of performance and memory.

  - Concurrent implementation of high-performance inferencing alongside other functions.
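The idea of threads working collaboratively can be pictured as a pipeline in which each stage runs on its own thread and passes data to the next. The sketch below is a host-side analogy using Python's standard `threading` and `queue` modules; it says nothing about the actual xcore programming interfaces, and the stage functions, the DC offset of 128 and the threshold "model" are hypothetical stand-ins.

```python
import queue
import threading

STOP = None  # sentinel that tells the next stage to finish


def signal_conditioning(samples, out_q):
    """Stage 1: remove an assumed DC offset of 128 from each sample."""
    for s in samples:
        out_q.put(s - 128)
    out_q.put(STOP)


def inference(in_q, out_q):
    """Stage 2: stand-in for a model, labels positive inputs as 1."""
    while True:
        x = in_q.get()
        if x is STOP:
            out_q.put(STOP)
            break
        out_q.put(1 if x > 0 else 0)


def control(in_q, results):
    """Stage 3: collect the labels, as a control task might act on them."""
    while True:
        label = in_q.get()
        if label is STOP:
            break
        results.append(label)


def run_pipeline(samples):
    """Run the three stages concurrently and return the collected labels."""
    q1, q2 = queue.Queue(), queue.Queue()
    results = []
    threads = [
        threading.Thread(target=signal_conditioning, args=(samples, q1)),
        threading.Thread(target=inference, args=(q1, q2)),
        threading.Thread(target=control, args=(q2, results)),
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results


labels = run_pipeline([100, 200, 128, 255])
```

Each queue has a single producer and a single consumer, so the order of results matches the order of the input samples.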

Technical

| Part Number | Package | I/O voltage | I/O pins | External Interfaces | Datasheet | Buy |
|---|---|---|---|---|---|---|
| XU316-1024-QF60A | 60-pin QFN (7x7mm) | 1V8 | 34 | USB | DATASHEET | |
| XU316-1024-QF60B | 60-pin QFN (7x7mm) | 3V3 | 34 | USB | DATASHEET | |
| XU316-1024-FB265 | 265-pin FBGA (14x14mm) | 1V8 / 3V3 | 128 | USB, Single or Dual lane MIPI D-PHY receiver, LPDDR1 | DATASHEET | |
| XU316-1024-TQ128 | 128-pin TQFP (14x14mm) | 1V8 / 3V3 | 78 | USB, Single or Dual lane MIPI D-PHY receiver | DATASHEET | |

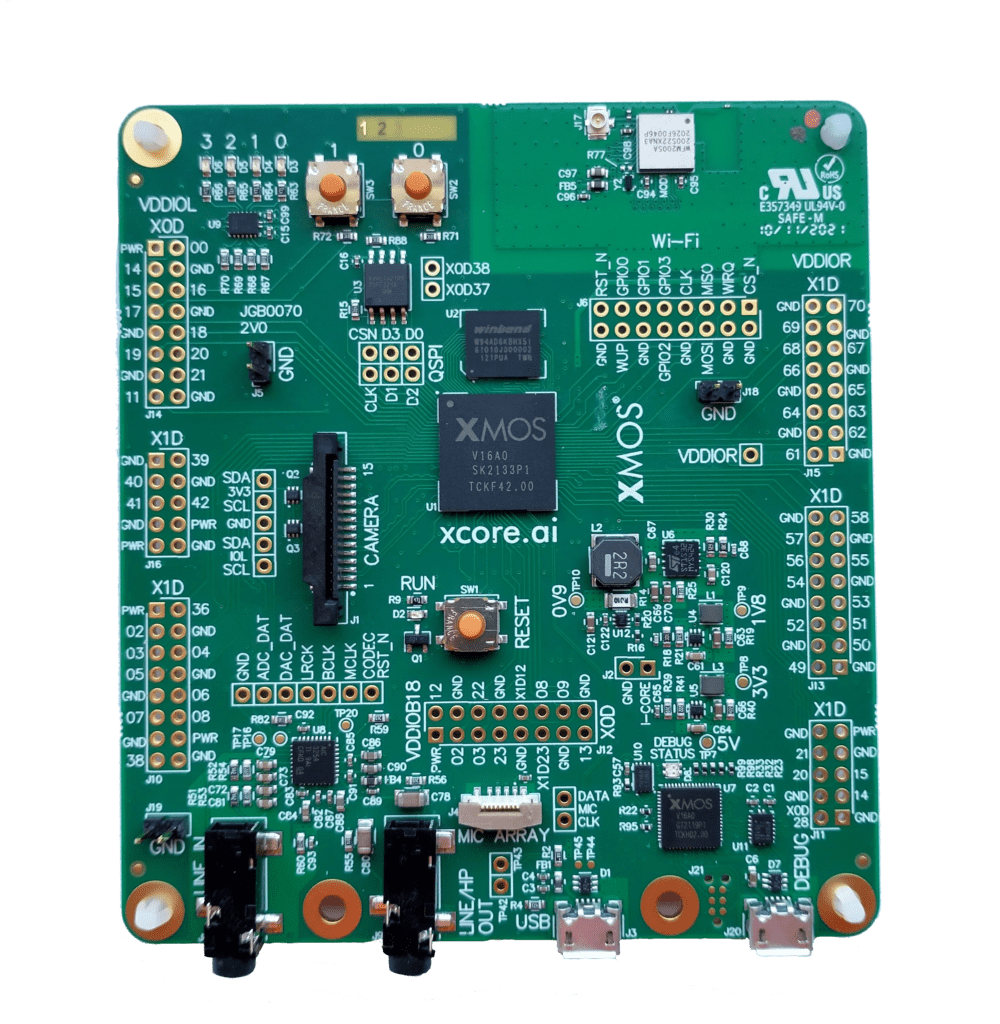

Start your development with the xcore.ai Evaluation Kit

The xcore.ai evaluation kit allows testing in multiple application scenarios and provides a good general software development board for simple tests and demos.

Our kit includes

- the xcore.ai crossover processor

- 4 general purpose LEDs

- 2 general purpose push-button switches

- a PDM microphone connector

- audio codec with line-in and line-out jack

- QSPI flash memory

- LPDDR1 external memory

- 58 GPIO connections from tile 0 and 1

- micro USB for power and host connection

- MIPI connector for a MIPI camera

- xSYS2 connector for debug adapter

- a reset switch with LED to indicate running.

Developer Resources

| Title | Version | Date | Download |
|---|---|---|---|
| The XMOS XS3 Architecture | | 2025-03-06 | html |
| Tools 15 - Documentation | 15.3.1 | 2025-10-01 | tgz |
| XU316-1024 xcore.ai Datasheet | 2.0.0 | 2025-01-13 | html |
| XU316-1024-FB265 Datasheet | 2.0.0 | 2025-01-13 | html |
| XU316-1024-QF60A Datasheet | 2.0.0 | 2025-01-13 | html |
| XU316-1024-QF60B Datasheet | 2.0.0 | 2025-01-13 | html |
| XU316-1024-TQ128 Datasheet | 2.0.0 | 2025-01-13 | html |
| xcore.ai FB265 package port map | 1.0 | 2025-03-11 | xls |
| xcore.ai I/O Timings | | 2024-10-21 | html |
| xcore.ai Package port map | | 2024-04-03 | xls |
| xcore.ai Product brief | 7.0 | 2025-06-26 | |
| xcore.ai QF60A/B package port map | 1.0 | 2025-03-11 | xls |
| xcore.ai TQ128 package port map | 1.0 | 2025-03-11 | xls |

| Title | Version | Date | Download |
|---|---|---|---|
| XU316-1024-FB265 BSDL File | 1.0 | 2025-02-04 | bsd |
| XU316-1024-QF60 BSDL File | 1.0 | 2025-02-04 | bsd |
| XU316-1024-TQ128 BSDL File | | 2025-02-10 | bsd |
| xcore.ai Evaluation Kit Altium and Manufacturing files | 2.0 | 2022-07-19 | zip |
| xcore.ai Evaluation Kit Schematics | 2.0 | 2022-07-26 | |
| xcore.ai Evaluation Kit v2.0 hardware manual | 2V0 | 2024-02-12 | |
